Paper总结 - Relational Representation Learning for Dynamic (Knowledge) Graph: A Survey

This paper did a detailed survey on Representation Learning on (Knowledge) Graph. It gives a valid devolopment progress on static KGRL and makes a summary on approaches of Dynamic Graph Representation Learning. They describes existing models from an encode-decode perspective and categorize these encoders and decoders based on the techniques they employ.

And in the end they highlighr directions for future research.

Firtly I want present the devolope line of KGRL. (Understanding big picture of KGRL is very necessary!!)

In this section I present several definations:

Dynamic Graph (Not KG):

- Continuous-time dynamic graph (\(CTDG\)): \(CTDG = (G,O)\), \(G\) representes graph at time \(t_1\), \(O\) represents several observations occur after \(t_1\). This defination of dynamic graph can capture all the changes happened over time.

- Discrete-time dynamic graph (\(DTDG\)): \(DTDG = (G_1, G_2, ..., G_n)\). Each \(G_i\) is a snapshot and representes graph at time \(i\). \(DTDG\) may not capture all the changes on a graph because there may be a time interval between two adjacent snapshots.

We call both \(CTDG\) and \(DTDG\) dynamic graph. Most existing models for dynamic learning is on \(DTDG\) because it is easier.

KGs: I think I am a bit of familiar with.

General Tasks for Dynamic graphs:

- Node classification

- Graph complement/Link prediction: main task of KGs

- Graph classification

Streaming scenario: A model may not have enough time to retrain compeletly or in part when new observations arrive. Streaming scernarios are often best handled by \(CTDGs\).

Encoder-decoder framework for static graph and KGs:

Decoders for static graph: very simple

- average of two vectors

- element-wise multiplication of two vectors

- element-wise absolute value of the difference of the two vectors

- elemet-wise squared value of the difference of the two vectors

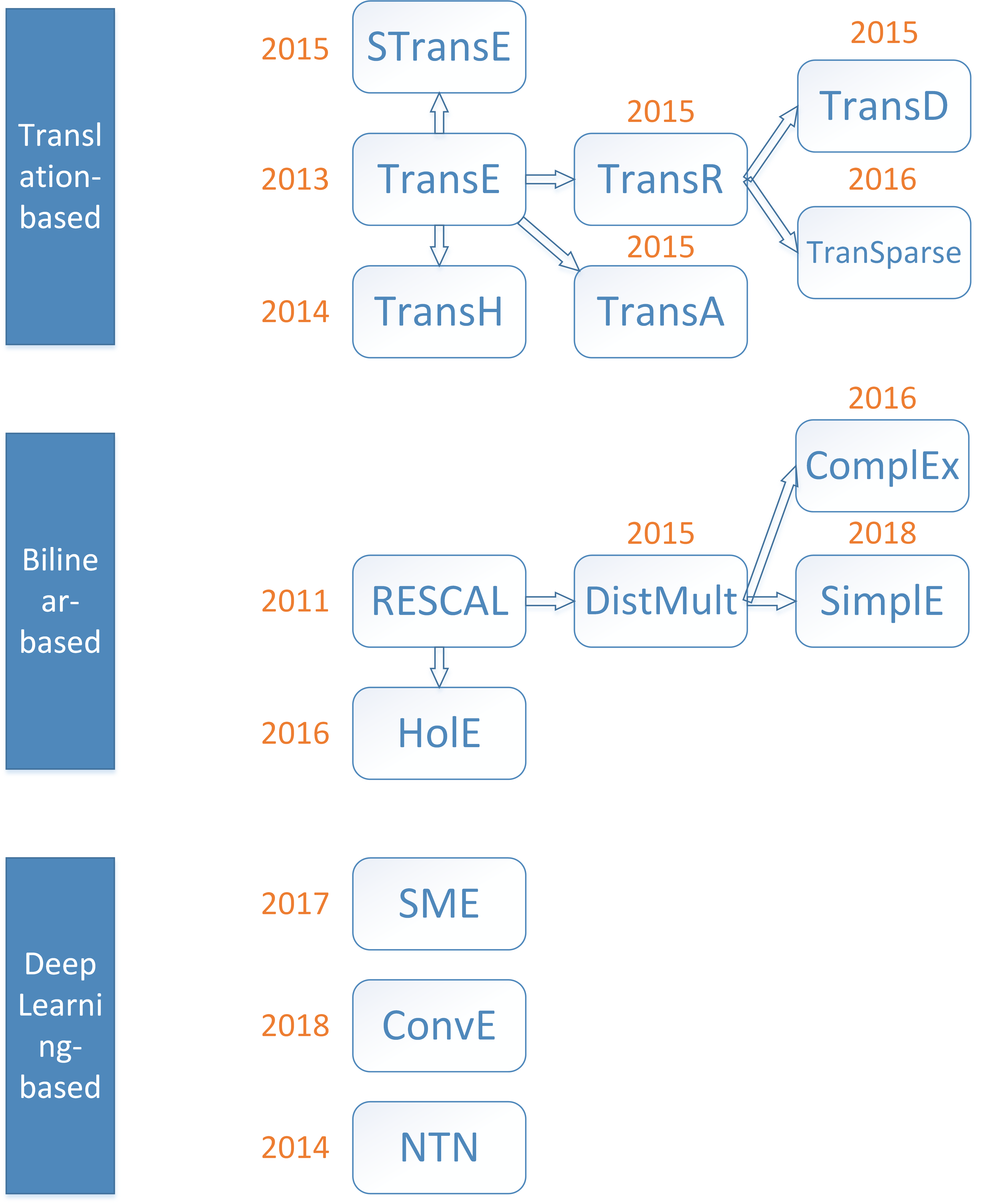

Decoders for static KGs: much more complex. Examples of each catelogue is shown in previous picture.

- Translation-based models

- Bilinearity-based models

- Neural network-based models

Encoders for static graph:

- High-Order Proximity Matrices: an extension of adjacency matrices

- Shallow Encoders

- Decomposition Approaches: its idea is that connected nodes are close to each other in the embedded space.

- Random Walk Approaches

- Autoencoder Approades:

- GCN Approaches

Encoders for static KGs: very few compared to static graph

- shallow encoders

- GCNs: such as R-GCN

Encoder-decoder framework for dynamic graph: (Note that no Encoders and Decoders of dynamic KGs are introduced in this survey paper).

Decoders for dynamic graph: I know nearly nothing about this.

- Time -predicting decoders

- Time-conditioned decoders

- Staleness

Encoders for dynamic graph:

- Aggregating Temporal Observations

- Aggregating Static Features

- Time as a Regularizer

- Decomposition-based Encoders

- Random Walk Encoders

- Sequence-Model Encoders

- Autoencoder-based Encoders

Applications, Datasets and Codes

Applications:

- Link prediction

- Entity/relation prediction

- Recommender Systems

- Time Prediction

- Node classfication

- Graph classification

- Network clustering

- Question/query answering

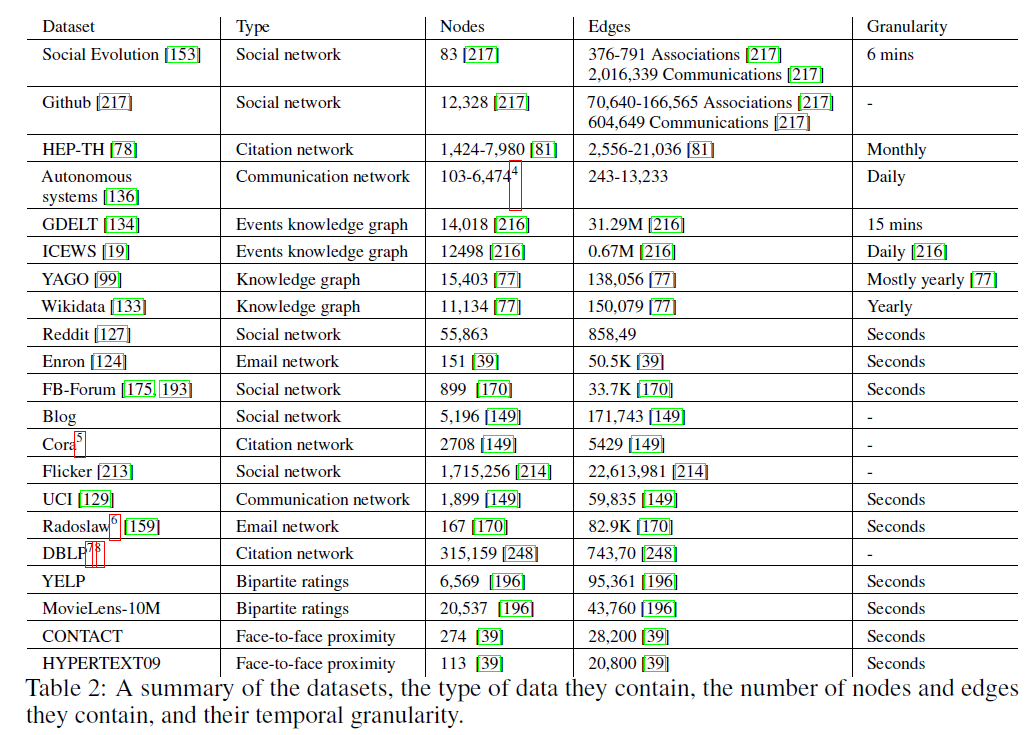

Datasets: A collection of datasets used for research on static and dynamic graphs.

Future Direction:

- The best way to extend the existing models for daynamic graph to KGs is still not clear!

- Currently representation learning algorithms have been mostly designed for \(DTDG\), with only few works on \(CTDG\).