VLLM Roadmap

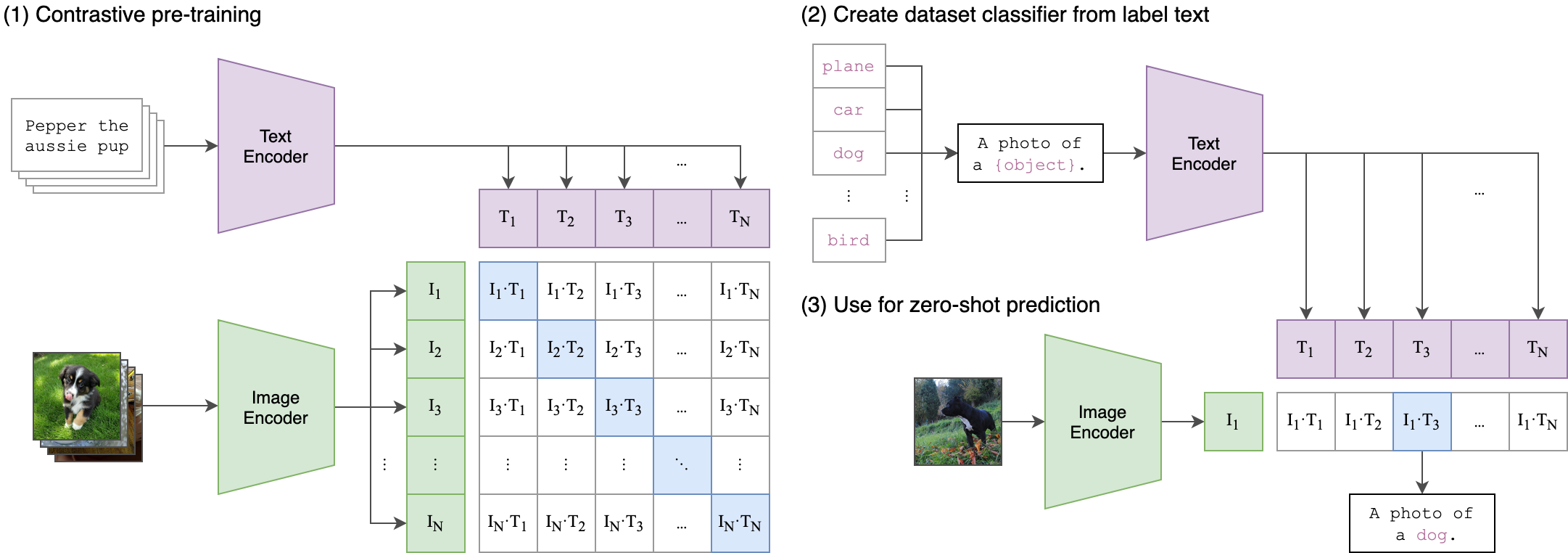

CLIP

publish year: 2021 author: OpenAI

CLIP is a vision-language model that learns to match images and text by training on image-caption pairs (a custom dataset of about 400 million image-text pairs collected from the internet). It is encoder-based, with two independent encoders: one for images and one for text. For a batch of N (image, text) pairs, CLIP computes a similarity score \(s_{ij}\) for every possible pairing of the i-th image with the j-th text. It then maximizes the scores of the N positive (true) pairs while minimizing the scores of the remaining \(N^2 - N\) negative pairs.

Detailed steps:

1. Cosine Similarity Matrix

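Given a batch with \(N\) images and \(N\) texts, the image embeddings are \(\left\{v_i\right\}_{i=1}^N\) and the text embeddings are \(\left\{t_i\right\}_{i=1}^N\). The similarity score \(s_{ij}\) is the cosine similarity of the two embeddings, \(s_{ij} = \frac{v_i^\top t_j}{\lVert v_i \rVert \, \lVert t_j \rVert}\). Collecting all scores gives an \(N \times N\) matrix whose diagonal holds the true pairs.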

2. Softmax Normalization (Turn Similarities into Probabilities)

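For each image \(i\), apply a softmax across its similarities with all texts (row-wise): \(p_{ij} = \frac{\exp(s_{ij}/\tau)}{\sum_{k=1}^N \exp(s_{ik}/\tau)}\) is the probability that image \(i\) pairs with text \(j\). Do the same for each text \(j\) across all images (column-wise): \(q_{ij} = \frac{\exp(s_{ij}/\tau)}{\sum_{k=1}^N \exp(s_{kj}/\tau)}\) is the probability that text \(j\) pairs with image \(i\). Here \(\tau\) is a temperature that CLIP learns during training.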

3. Cross-Entropy Loss

Since each image \(i\) should only match text \(i\), and vice versa, the contrastive loss for images is \(L_{\text{image}} = \frac{1}{N} \sum_{i=1}^N (-\log p_{ii})\). And for texts: \(L_{\text{text}} = \frac{1}{N} \sum_{i=1}^N (-\log q_{ii})\).

The final CLIP loss is the average of both: \(L = \frac{1}{2} \left( L_{\text{image}} + L_{\text{text}} \right)\).
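The three steps above can be sketched in plain Python as follows. This is a minimal, non-authoritative illustration: the function names are hypothetical, and the temperature `tau` is fixed at 0.07 (the value CLIP's learned temperature is initialized to) rather than learned. Real implementations compute the same quantities with batched matrix operations on a GPU.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(vec: Vector) -> Vector:
    """Scale a (non-zero) vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def log_softmax(logits: Vector) -> Vector:
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def clip_loss(image_embs: List[Vector], text_embs: List[Vector], tau: float = 0.07) -> float:
    """Symmetric contrastive loss; image i and text i form the i-th positive pair."""
    n = len(image_embs)
    v = [l2_normalize(e) for e in image_embs]
    t = [l2_normalize(e) for e in text_embs]
    # logits[i][j] = s_ij / tau, where s_ij is the cosine similarity of v_i and t_j
    logits = [[sum(a * b for a, b in zip(v[i], t[j])) / tau for j in range(n)] for i in range(n)]
    # Row-wise softmax (image i vs. all texts): L_image = mean of -log p_ii
    loss_image = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Column-wise softmax (text j vs. all images): L_text = mean of -log q_ii
    columns = [[logits[k][j] for k in range(n)] for j in range(n)]
    loss_text = -sum(log_softmax(columns[i])[i] for i in range(n)) / n
    return 0.5 * (loss_image + loss_text)


aligned = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # True: correctly matched pairs give a much lower loss
```

When every embedding is identical, all logits are equal and the loss reduces to \(\log N\), which is a handy sanity check.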

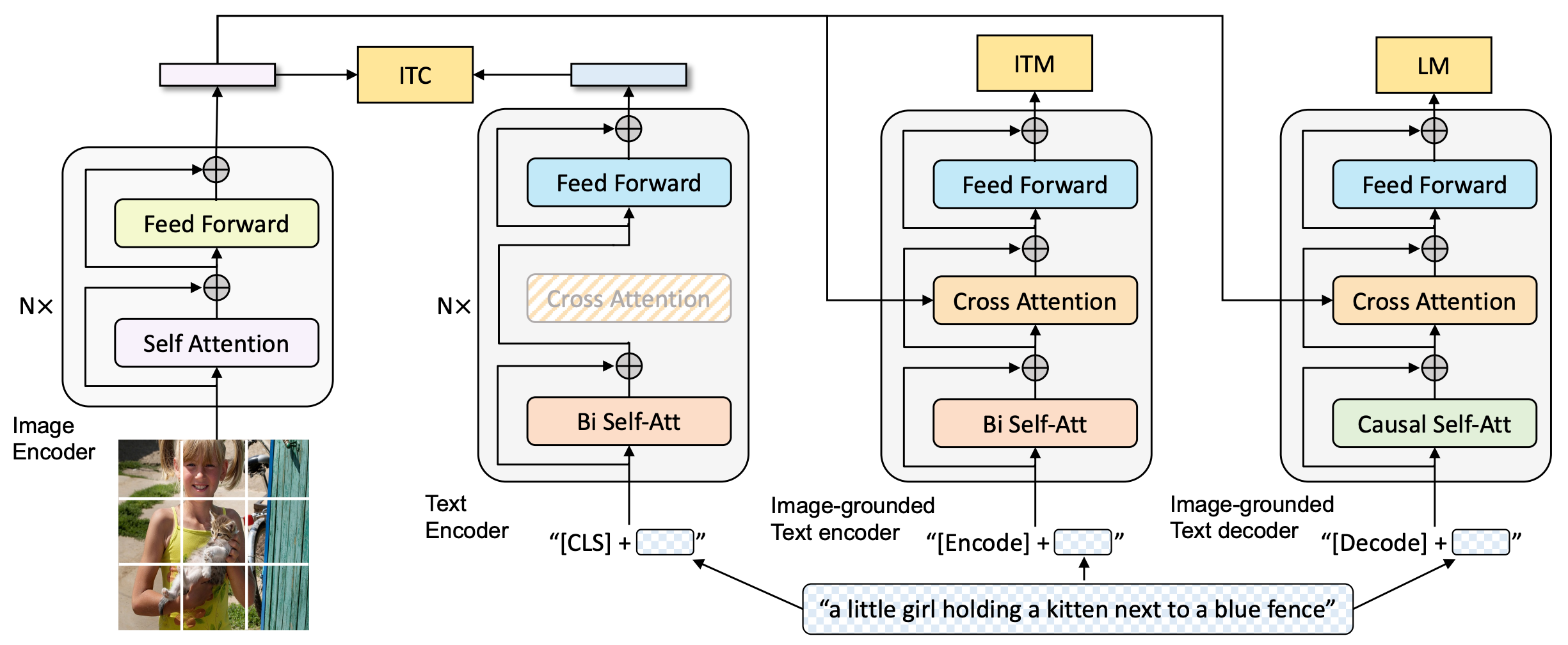

BLIP (Bootstrapping Language-Image Pre-training)

publish year: 2022 author: Salesforce

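BLIP uses a unified vision-language encoder-decoder model (a multimodal mixture of encoder-decoder, MED). It is designed for both multimodal understanding and text generation tasks, such as image-text retrieval, image captioning, and visual question answering (VQA).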

Whereas CLIP trains only on the collected image-text pairs, BLIP also bootstraps its own captions (the CapFilt step): a captioner generates synthetic captions for web images, and a filter removes noisy captions, both the original web text and the synthetic ones. The cleaned captions are then reused as training data, improving alignment between images and text. This bootstrapping makes BLIP especially good at tasks such as image captioning and visual reasoning.
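The bootstrapping loop can be sketched as below. This is a hedged outline of the data flow only: `captioner` and `itm_score` are hypothetical callables standing in for BLIP's captioning decoder and its image-text matching filter, and the 0.5 `threshold` is an illustrative assumption, not a value taken from BLIP.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (image_id, caption)


def capfilt(
    web_pairs: List[Pair],
    captioner: Callable[[str], str],
    itm_score: Callable[[str, str], float],
    threshold: float = 0.5,
) -> List[Pair]:
    """Caption each web image, then keep only the captions the filter judges to match."""
    bootstrapped: List[Pair] = []
    for image_id, web_caption in web_pairs:
        # Captioner step: generate a synthetic caption for the web image.
        synthetic_caption = captioner(image_id)
        # Filter step: the original web text and the synthetic caption are both checked.
        for caption in (web_caption, synthetic_caption):
            if itm_score(image_id, caption) >= threshold:
                bootstrapped.append((image_id, caption))
    return bootstrapped


# Toy stand-ins (hypothetical): a template captioner and a keyword-matching "filter".
def toy_captioner(image_id: str) -> str:
    return f"a photo of a {image_id}"


def toy_itm(image_id: str, caption: str) -> float:
    return 1.0 if image_id in caption else 0.0


pairs = [("cat", "buy cheap shoes now"), ("dog", "my dog at the beach")]
print(capfilt(pairs, toy_captioner, toy_itm))
# [('cat', 'a photo of a cat'), ('dog', 'my dog at the beach'), ('dog', 'a photo of a dog')]
```

The noisy alt-text "buy cheap shoes now" is dropped while the synthetic caption for the same image survives, which is the effect the bootstrapping step is after. In BLIP, human-annotated pairs are added to the final dataset as-is.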

LLaVA (Large Language and Vision Assistant)

publish year: 2023 author: University of Wisconsin-Madison, etc.

LLaVA is a vision-language model that combines:

- LLaMA (a powerful large language model; the original LLaVA uses Vicuna, a fine-tuned LLaMA)

- A vision encoder (e.g., CLIP’s image encoder)

- A simple projection layer to map the visual features into the text embedding space.

The core change: LLaVA modifies LLaMA by adding a simple vision pathway so LLaMA can process images + text together.

1. Added a Vision Encoder

LLaVA uses a pretrained CLIP Vision Transformer (ViT) to extract image features.

2. Added a Projection Layer (Align Vision with Language)

CLIP’s image features live in CLIP’s embedding space, while LLaMA’s token embeddings live in LLaMA’s space. LLaVA adds a small projection layer (a single linear layer in the original LLaVA, a two-layer MLP in LLaVA-1.5) to map the image patch features into the same space as LLaMA’s token embeddings. Each projected patch feature becomes a visual token that LLaMA can "read" like a text token, so the input sequence is:

[Image tokens from vision encoder] + [Text prompt tokens]

3. Minor Positional Embedding Handling

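LLaMA uses rotary position embeddings (RoPE), which have no learned per-position parameters, so nothing needs to be extended: the visual tokens simply take the first positions of the sequence and the text tokens follow, as long as the total length fits within LLaMA’s context window.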

4. The Rest

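LLaVA does not change anything else: the attention layers, feed-forward layers, etc. are identical to LLaMA's. All weights other than the projection layer are initialized from the pretrained models (the CLIP vision encoder and the LLM).

The CLIP vision encoder stays frozen throughout training. In the first stage (feature alignment pre-training), the LLM is also frozen and only the projection layer is trained; in the second stage (end-to-end visual instruction tuning), the projection layer and the LLM are fine-tuned together.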
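The LLaVA pathway described above can be sketched in plain Python. This is a toy illustration under stated assumptions: `MLPProjector` follows the LLaVA-1.5 style Linear, GELU, Linear shape with small random weights; `build_llm_input` and `TRAINABLE` are hypothetical names; and the patch features and text embeddings are made-up stand-ins for CLIP ViT outputs and LLaMA's embedding lookups. Visual tokens are simply prepended, matching the ordering described above.

```python
import math
import random
from typing import Dict, List, Set, Tuple

Vector = List[float]
Matrix = List[Vector]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Compute W x + b, with W stored as out_dim rows of length in_dim."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def gelu(x: float) -> float:
    """tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class MLPProjector:
    """Linear -> GELU -> Linear, mapping vision_dim features to llm_dim embeddings."""

    def __init__(self, vision_dim: int, llm_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> Matrix:
            return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

        self.w1, self.b1 = init(llm_dim, vision_dim), [0.0] * llm_dim
        self.w2, self.b2 = init(llm_dim, llm_dim), [0.0] * llm_dim

    def __call__(self, feature: Vector) -> Vector:
        hidden = [gelu(h) for h in linear(feature, self.w1, self.b1)]
        return linear(hidden, self.w2, self.b2)


def build_llm_input(
    patch_features: List[Vector], text_embeddings: List[Vector], projector: MLPProjector
) -> Tuple[List[Vector], List[int]]:
    """[image tokens] + [text prompt tokens], with ordinary consecutive position ids."""
    visual_tokens = [projector(f) for f in patch_features]
    sequence = visual_tokens + text_embeddings
    # RoPE needs no extra parameters: visual tokens just take positions 0..P-1.
    position_ids = list(range(len(sequence)))
    return sequence, position_ids


# Modules that receive gradient updates in each stage; the vision encoder is always frozen.
TRAINABLE: Dict[str, Set[str]] = {
    "feature_alignment": {"projector"},
    "visual_instruction_tuning": {"projector", "llm"},
}

projector = MLPProjector(vision_dim=4, llm_dim=6)
patches = [[0.1, 0.2, 0.3, 0.4]] * 3  # 3 hypothetical patch features
prompt = [[0.0] * 6, [1.0] * 6]  # 2 hypothetical text-token embeddings
seq, pos = build_llm_input(patches, prompt, projector)
print(len(seq), pos)  # 5 [0, 1, 2, 3, 4]
```

At realistic sizes the shapes are much larger: with CLIP ViT-L/14 at 336 px, as in LLaVA-1.5, the encoder yields 24 × 24 = 576 patch features, so 576 visual tokens precede the text.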

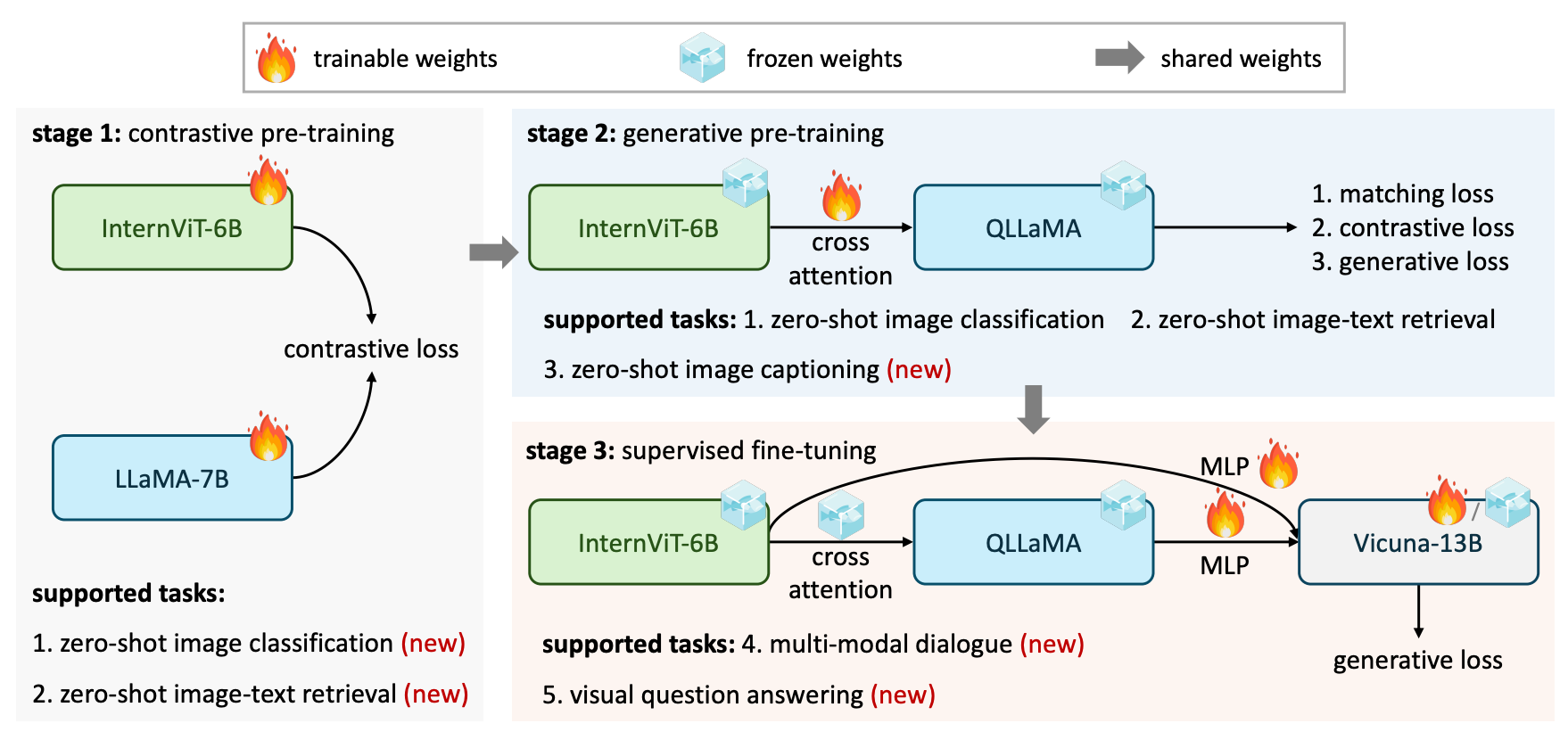

InternVL

publish year: 2023 author: Shanghai AI Lab

InternVL is a large-scale vision-language foundation model. It scales up the vision encoder to 6 billion parameters (InternViT-6B) so that its size is closer to that of state-of-the-art LLMs, giving parameter-balanced vision and language components. Its training consists of three progressive stages:

Stage 1: Vision-Language Contrastive Training

InternVL's vision encoder, InternViT-6B, is a vanilla vision transformer. In this stage it is aligned with a LLaMA-based text encoder on large sets of web image-text pairs, and the objective function is the same as CLIP's.

Stage 2: Vision-Language Generative Training

QLLaMA is a language middleware initialized from a pretrained multilingual LLaMA, with newly added learnable queries and cross-attention layers. In this stage they keep both InternViT-6B and QLLaMA frozen and train only the newly added learnable queries and cross-attention layers, using filtered, high-quality data.

Stage 3: Supervised Fine-tuning

They connect InternViT with an off-the-shelf LLM decoder (e.g., Vicuna or InternLM) through an MLP layer, and conduct supervised fine-tuning (SFT).
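The Stage 2 idea of learnable queries reading a frozen image encoder through cross-attention can be sketched as follows. This is a simplified, hypothetical illustration, not InternVL's code: it uses a single attention head and no query/key/value projection matrices (keys and values are the image features themselves), so in this sketch the queries are the only trainable tensor. `cross_attention` and `init_learnable_queries` are illustrative names.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, image_features: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: each query attends over all image features.

    Simplification: keys and values are the image features themselves (no W_q/W_k/W_v).
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in image_features]
        weights = softmax(scores)
        # Weighted average of the image features, one output vector per query.
        outputs.append([sum(w * v[c] for w, v in zip(weights, image_features)) for c in range(d)])
    return outputs


def init_learnable_queries(num_queries: int, dim: int, seed: int = 0) -> Matrix:
    """Randomly initialized query vectors (the trainable part in this sketch)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_queries)]


rng = random.Random(1)
queries = init_learnable_queries(num_queries=4, dim=3)
frozen_patches = [[rng.random() for _ in range(3)] for _ in range(10)]  # stand-in for frozen InternViT output
summary = cross_attention(queries, frozen_patches)
print(len(summary), len(summary[0]))  # 4 3
```

The design point is that the output length equals the number of queries (4 here), regardless of how many patch features the vision encoder produces (10 here), so the frozen language side always receives a fixed-size visual summary.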