Incremental Representation Learning of KGs

Explore a novel method to embed new entities efficiently and effectively (2019)

This project was carried out in 2019 as part of a Tencent-University Cooperation Program.

Knowledge representation learning (KRL) aims to embed the entities and relations of a knowledge graph (KG) into a low-dimensional vector space, and it is used in a wide variety of knowledge-driven applications. Because real-world knowledge grows continually, the demand for usable online learning models is increasing quickly. However, existing mainstream KRL models are all trained on static knowledge graphs and cannot learn online when new data arrive frequently.

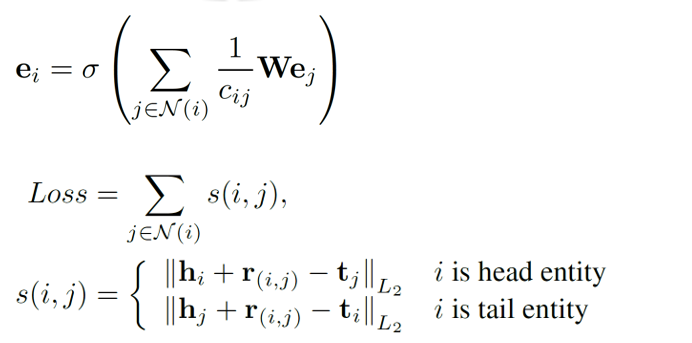

To address this problem, we draw on graph convolutional networks (GCNs), which learn node representations from the interactions between nodes and their neighbors, and design a novel model that learns embeddings of newly added entities efficiently and effectively.

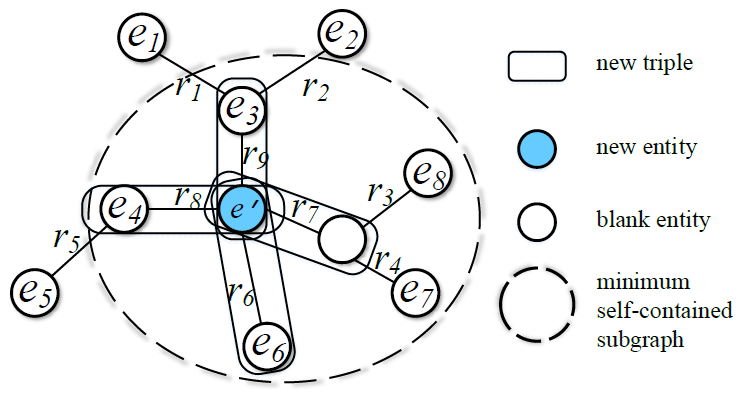

Specifically, when a new entity arrives, we first construct a minimum self-contained subgraph for it, and then apply GCNs to this subgraph to learn a representation of the new entity by aggregating information from its neighboring entities.

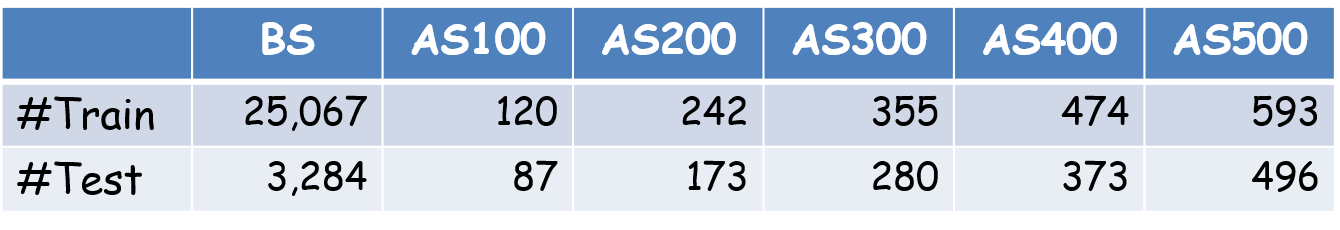
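The aggregation step described above can be illustrated with a minimal sketch. It is not the project's implementation: it assumes the subgraph is simply the new entity plus its one-hop neighbors that already have embeddings, and it uses a single mean-aggregation layer with a ReLU. The names `one_hop_neighbors`, `embed_new_entity`, and `weight` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


def one_hop_neighbors(
    new_entity: str, triples: Sequence[Triple], known: Dict[str, List[float]]
) -> List[str]:
    """Collect already-embedded entities directly linked to the new entity.

    Assumption: this one-hop neighborhood stands in for the
    "minimum self-contained subgraph"; the real construction may differ.
    """
    neighbors: List[str] = []
    for head, _relation, tail in triples:
        if head == new_entity and tail in known:
            other = tail
        elif tail == new_entity and head in known:
            other = head
        else:
            continue
        if other not in neighbors:
            neighbors.append(other)
    return neighbors


def embed_new_entity(
    new_entity: str,
    triples: Sequence[Triple],
    entity_emb: Dict[str, List[float]],
    weight: List[List[float]],
) -> List[float]:
    """One GCN-style layer: mean of neighbor embeddings, linear map, ReLU."""
    neighbors = one_hop_neighbors(new_entity, triples, entity_emb)
    if not neighbors:
        raise ValueError(f"{new_entity!r} has no embedded neighbors")

    in_dim, out_dim = len(weight), len(weight[0])
    # Average the neighbor embeddings dimension by dimension.
    mean = [
        sum(entity_emb[n][i] for n in neighbors) / len(neighbors)
        for i in range(in_dim)
    ]
    # Apply the (hypothetical, already trained) weight matrix, then ReLU.
    projected = [
        sum(mean[i] * weight[i][j] for i in range(in_dim)) for j in range(out_dim)
    ]
    return [max(0.0, x) for x in projected]


# Toy usage: two existing entities, identity weights.
existing = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
new_triples = [("new", "r", "a"), ("b", "r", "new"), ("a", "r", "b")]
new_vec = embed_new_entity("new", new_triples, existing, [[1.0, 0.0], [0.0, 1.0]])
```

Because only the neighborhood of the new entity is touched, the cost of embedding it does not depend on the size of the full graph. That is the intuition behind the efficiency claim.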

We evaluated our model on link prediction and time cost using one of Tencent’s gaming KGs. We constructed a base dataset (BS) and five batches of additional data (AS) to simulate the arrival of new data. The dataset statistics are shown below.

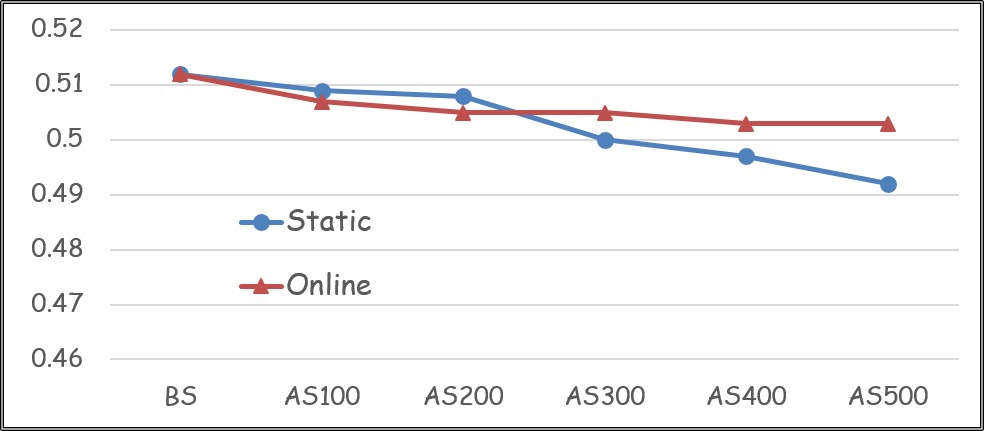

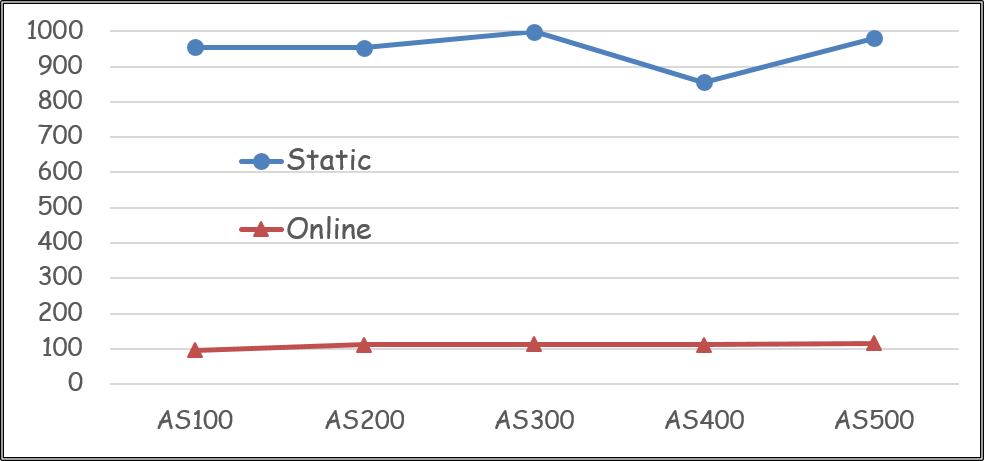
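For readers unfamiliar with link prediction, the sketch below shows one common way to score it: rank the true tail entity against all candidates and report the fraction of test triples ranked in the top k. It uses a TransE-style distance, the L2 norm of head + relation - tail, as the scoring function. The source does not state which metrics or norm were used, so Hits@k, the L2 norm, and the function names here are assumptions for illustration.

```python
import math
from typing import Dict, List


def transe_distance(
    head: List[float], relation: List[float], tail: List[float]
) -> float:
    """TransE-style score: L2 norm of (head + relation - tail); lower is better."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


def tail_rank(
    head: str,
    relation: str,
    true_tail: str,
    entity_emb: Dict[str, List[float]],
    relation_emb: Dict[str, List[float]],
) -> int:
    """Rank of the true tail among all entities (1 is best)."""
    h, r = entity_emb[head], relation_emb[relation]
    true_distance = transe_distance(h, r, entity_emb[true_tail])
    # Count candidates that score strictly better than the true tail.
    better = sum(
        1
        for name, vec in entity_emb.items()
        if name != true_tail and transe_distance(h, r, vec) < true_distance
    )
    return better + 1


def hits_at_k(ranks: List[int], k: int) -> float:
    """Fraction of test triples whose true tail is ranked within the top k."""
    return sum(1 for rank in ranks if rank <= k) / len(ranks)


# Toy usage with hand-made 2-d embeddings.
entities = {"h": [0.0, 0.0], "t1": [1.0, 0.0], "t2": [3.0, 0.0]}
relations = {"r": [1.0, 0.0]}
ranks = [
    tail_rank("h", "r", "t1", entities, relations),
    tail_rank("h", "r", "t2", entities, relations),
]
```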

We call our method "online" and compare it with TransE, a well-known static baseline. The results of link prediction and time cost are shown below.

Overall, the experiments demonstrated the effectiveness and efficiency of our method in handling incremental KGs.

P.S. I am glad to have been the program principal!